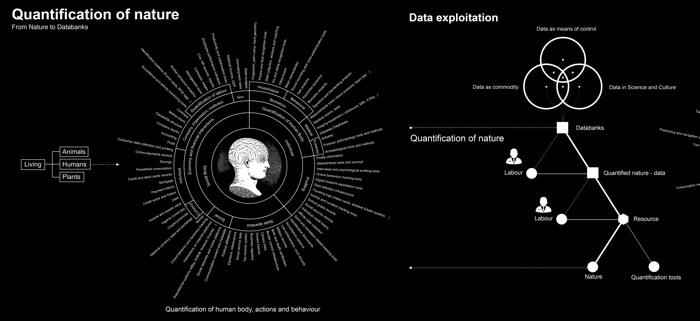

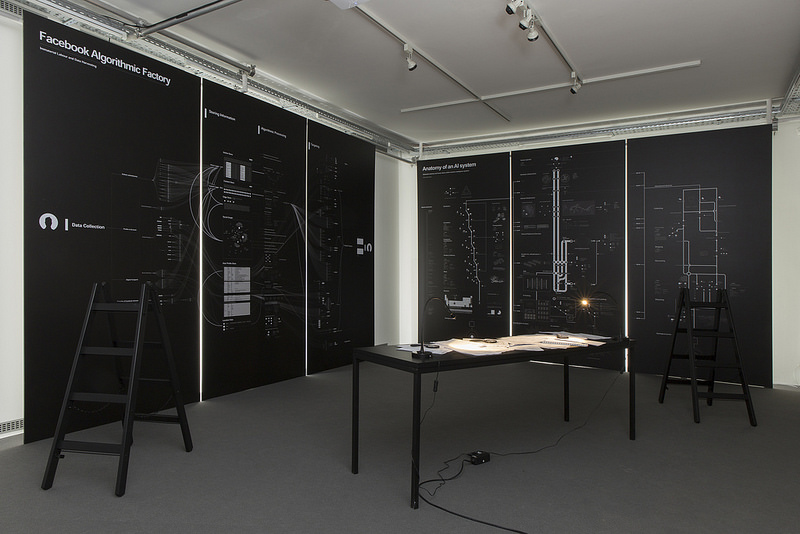

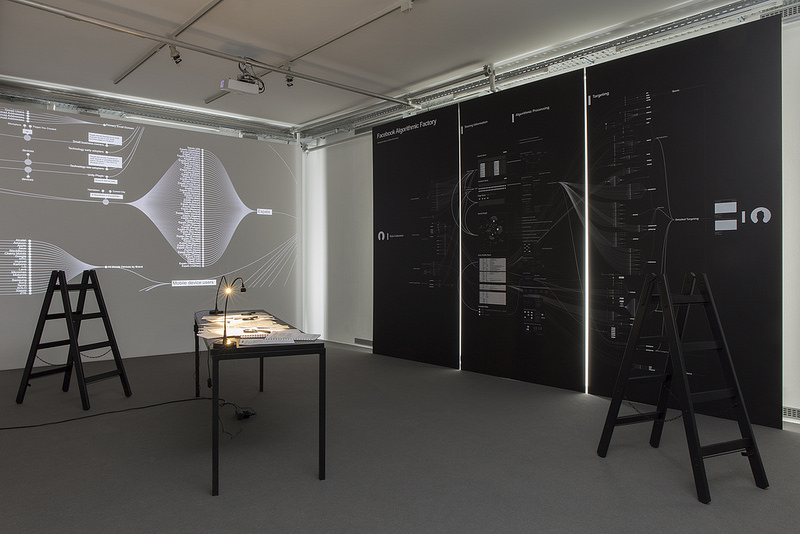

Vladan Joler and Kate Crawford, Anatomy of an AI system (detail)

If you find yourself in Ljubljana this week, don’t miss SHARE Lab. Exploitation Forensics at Aksioma.

The exhibition presents maps and documents that SHARE Lab, a research and data investigation lab based in Serbia, has created over the last few years in order to prize open, analyze and make sense of the black boxes that hide behind our most used platforms and devices.

The research presented at Aksioma focuses on two technologies that modern life increasingly relies on: Facebook and Artificial Intelligence.

The map dissecting the most famous social media ‘service’ might be sober and elegant but the reality it uncovers is anything but pretty. A close look at the elaborate graph reveals exploitation of material and immaterial labour and generation of enormous amounts of wealth with not much redistribution in between (to say the least). As for the map exploring the deep materiality of AI, it dissects the whole supply chain behind the technology deployed by Alexa and any other ‘smart’ device, from mining to transport, with more exploitation of data, labour and resources in the process.

Should you not find yourself in Ljubljana, then you can still discover the impulses, findings and challenges behind the maps in this video recording of the talk that the leader of the SHARE Lab, Prof. Vladan Joler, gave at Aksioma two weeks ago:

Talk by Vladan Joler at the Aksioma Project Space in Ljubljana on 29 November 2017

In the presentation, Joler talks about the superpowers of social media and the invisible infrastructures of AI, but he also makes fascinating forays into the quantification of nature, the language of neural networks, accelerating inequality gaps, troll-hunting and issues of surveillance capitalism.

I also took the Aksioma exhibition as an excuse to ask Vladan Joler a few questions:

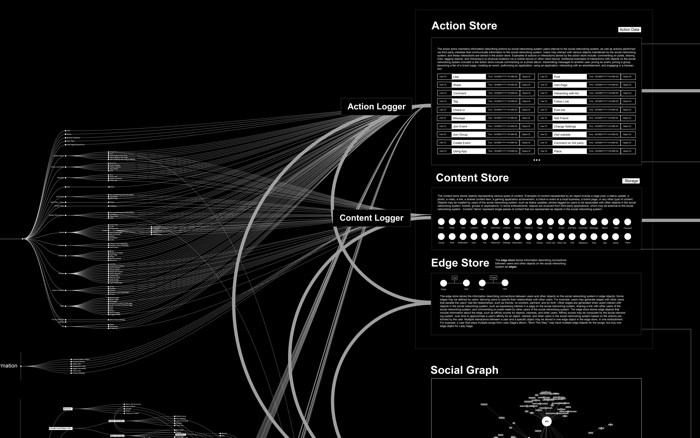

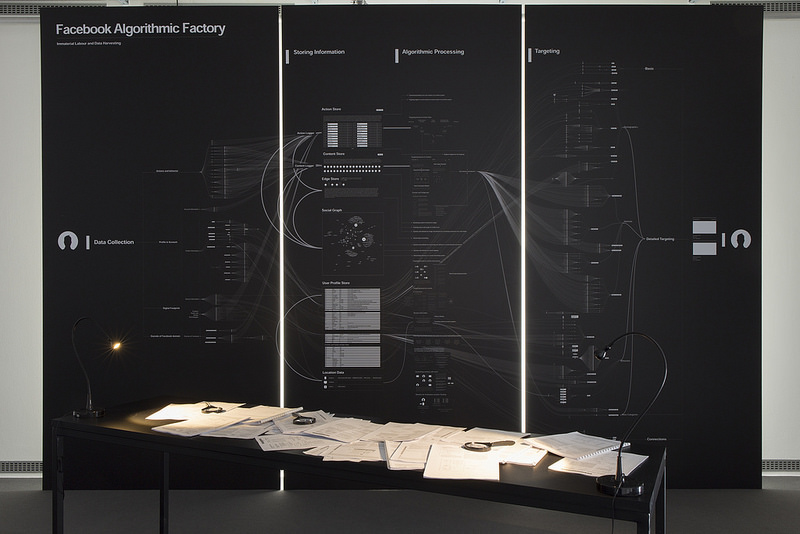

SHARE Lab (Vladan Joler and Andrej Petrovski), Facebook Algorithmic Factory (detail)

Hi Vladan! The Facebook maps and the information that accompanies them on the SHARE Lab website are wonderful but also a bit overwhelming. Is there anything we can do to resist the way our data are used? Is there any way we can still use Facebook while maintaining a bit of privacy, and without being too exploited or targeted by potentially unethical methods? Would you advise us to just cancel our Facebook account? Or is there a kind of middle way?

I have my personal opinion on that, but the issue is that in order to make such a decision each user should be able to understand what happens to their private data, data generated by activity and behaviour, and the many other types of data that are being collected by such platforms. However, the main problem, and the core reasoning behind our investigations, is that what happens within Facebook, for example, i.e. the way it works, is something that we can call a black box. The darkness of said boxes is shaped by many different layers of non-transparency. From different forms of invisible infrastructures over the ecosystems of algorithms to many forms of hidden exploitation of human labour, all those dark places are not meant to be seen by us. The only things that we are allowed to see are the minimalist interfaces and shiny offices where play and leisure meet work. Our investigations are exercises in testing our own capacities as independent researchers to sneak in and put some light on those hidden processes. So the idea is to try and give the users of those platforms more facts so that they are able to decide if the price they are paying might be too high in the end. After all, this is a decision that each person should make individually.

Another issue is that, the deeper we were going into those black boxes, the more we became conscious of the fact that our capacities to understand and investigate those systems are extremely limited. Back to your question, personally I don’t believe that there is a middle way, but unfortunately I also don’t believe that there is a simple way out of this. Probably we should try to think about alternative business models and platforms that are not based on surveillance capitalism. We are repeating this mantra about open source, decentralised, community-run platforms, to no real effect.

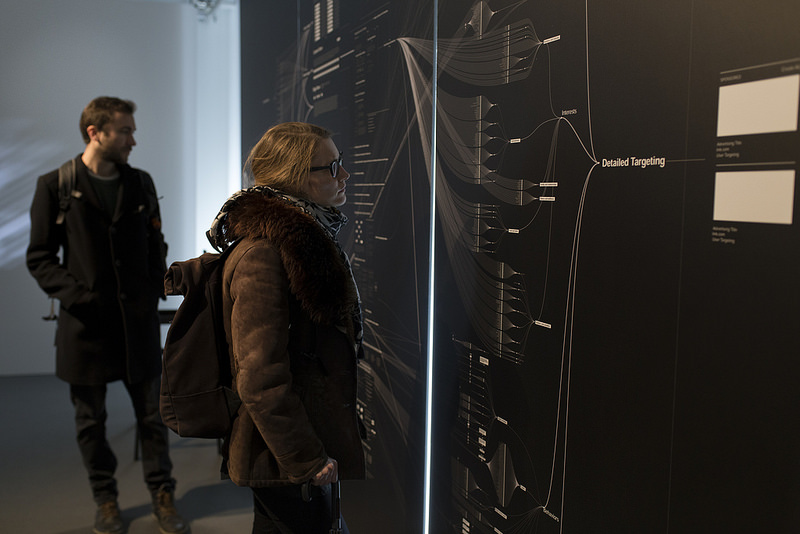

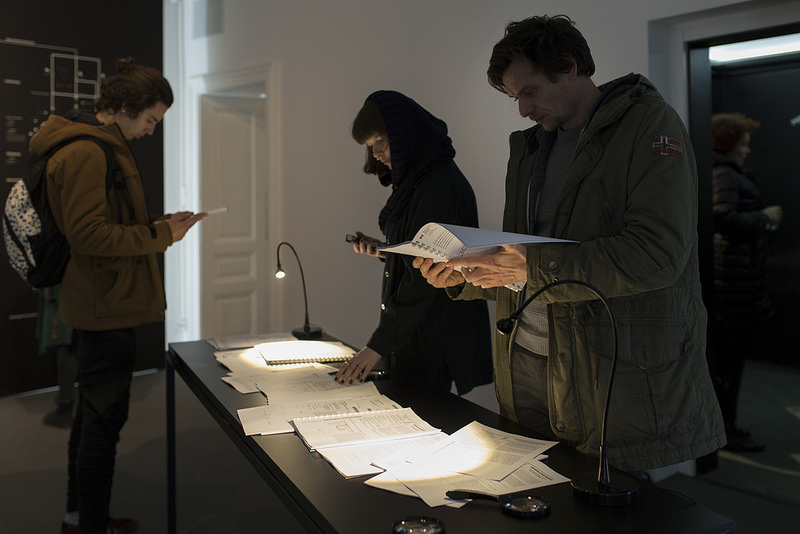

SHARE Lab. Exploitation Forensics at Aksioma Project Space. Photo: Jure Goršič / Aksioma

SHARE Lab. Exploitation Forensics at Aksioma Project Space. Photo: Janez Janša

The other depressing thing is that for many people, Facebook IS the internet. They just don’t care about privacy, privacy belongs in the past and being targeted is great because it means that Facebook is extra fun and useful. Do you think that fighting for privacy is a futile battle? That we should just go with the flow and adapt to this ‘new normal’?

It is interesting to think that privacy already belongs to the past since, historically speaking, privacy as we understand it today is not an old concept. It is questionable whether we ever had a moment in time when we had properly defined our right to privacy and were able to defend it. So, from my point of view, it is more of a process of exploration and an urge to define in each moment what privacy means in the present time. We should accept the decentralised view on the term privacy and accept that for different cultures this word has a different meaning and not just imply, for example, the European view on privacy. Currently, with such fast development of technology, with the lack of transparency-related tools and methodologies, outdated laws and ineffective bureaucracies, we are left behind in understanding what is really going on behind the walls of leading corporations whose business models are based on surveillance capitalism. Without understanding what is going on behind the walls of the five biggest technology firms (Alphabet, Amazon, Apple, Facebook and Microsoft) we cannot rethink and define what privacy in fact is nowadays.

The dynamics of power on the Web have dramatically changed, and Google and Facebook now have a direct influence over 70% of internet traffic. Our previous investigations show that 90% of the websites we investigated have some Google cookies embedded. So, they are the Internet today and, even more, their power is spilling out of the web into many other segments of our life, from our bedrooms, cars and cities to our bodies.

SHARE Lab. Exploitation Forensics at Aksioma Project Space. Photo: Janez Janša

Could you explain to us the title of the show “Exploitation Forensics”?

The Oxford dictionary gives us two main uses of the word exploitation: (1) the action or fact of treating someone unfairly in order to benefit from their work and (2) the action of making use of and benefiting from resources. Both senses relate directly to the two maps that are featured in the exhibition. We can read our maps as visualisations of the exploitation process, regardless of whether we speak about exploitation of our immaterial labour (content production and user behaviour as labour) or we go deeper and say that we are not even workers in that algorithmic factory, but pure, raw material, i.e. a resource (user behavioural data as a resource). Each day users of Facebook provide 300,000,000 hours of unpaid immaterial labour and this labour is transformed into 18 billion US dollars of revenue each year. We can argue whether that is something that we can call exploitation or not, for the simple reason that users use those platforms voluntarily, but for me the key question is: do we really have an option to stay out of those systems anymore? For example, our Facebook accounts are checked during visa applications, and the fact that you maybe don’t have a profile can be treated as an anomaly, as a suspicious fact.

Not having a profile will place you in a different basket, and maybe a different price model, if you want to get life insurance and, for sure, not having a LinkedIn account if you are applying for a job will lower your chances of getting the job you want. Our options of staying out are more and more limited each day and the social price we are paying to stay out of it is higher and higher.

If our Facebook map is somehow trying to visualise one form of exploitation, the other map, which had the unofficial title “networks of metal, sweat and neurons”, is visualising basically three crucial forms of exploitation during the birth, life and death of our networked devices. Here we are drawing shapes of exploitation related to different forms of human labour, exploitation of natural resources and exploitation of personal data, quantified nature and human-made products.

The word forensics is usually used for scientific tests or techniques used in connection with the detection of crime, and we used many different forensic methods in our investigations since my colleague Andrej Petrovski has a degree in cyber forensics. But in this case the use of this word can also be treated as a metaphor. I like to think of black boxes such as Facebook or complex supply chains and hidden exploitations as crime scenes. Crime scenes where different sorts of crimes against personal privacy, nature exploitation or, let’s say in some broad sense, crimes against humanity happen.

SHARE Lab. Exploitation Forensics at Aksioma Project Space. Photo: Jure Goršič / Aksioma

The maps are incredibly sophisticated and detailed. Surely you can’t have assimilated and processed all this data without the help of data crunching algorithms? Could you briefly describe your methodology? How did you manage to absorb all this information and turn it into a visualisation that is both clear and visually appealing?

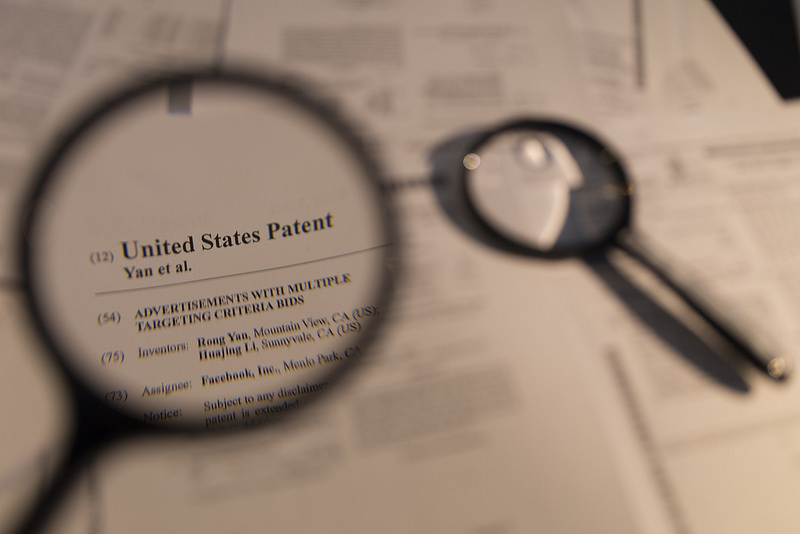

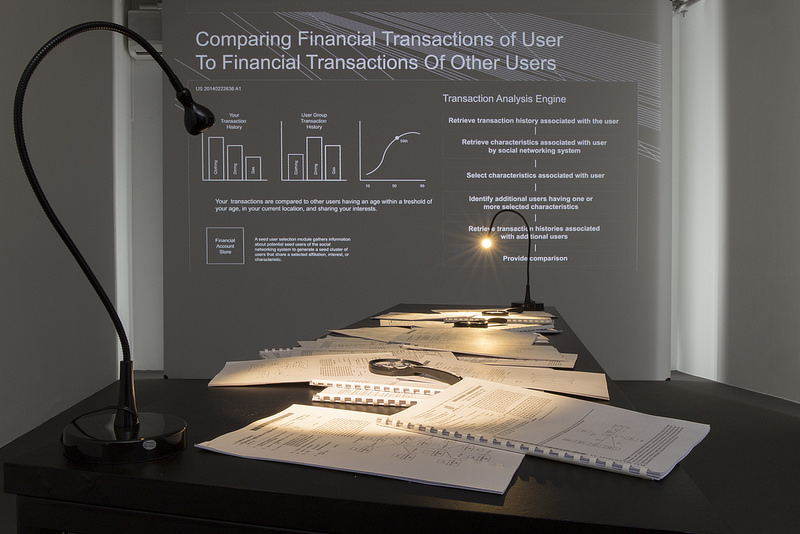

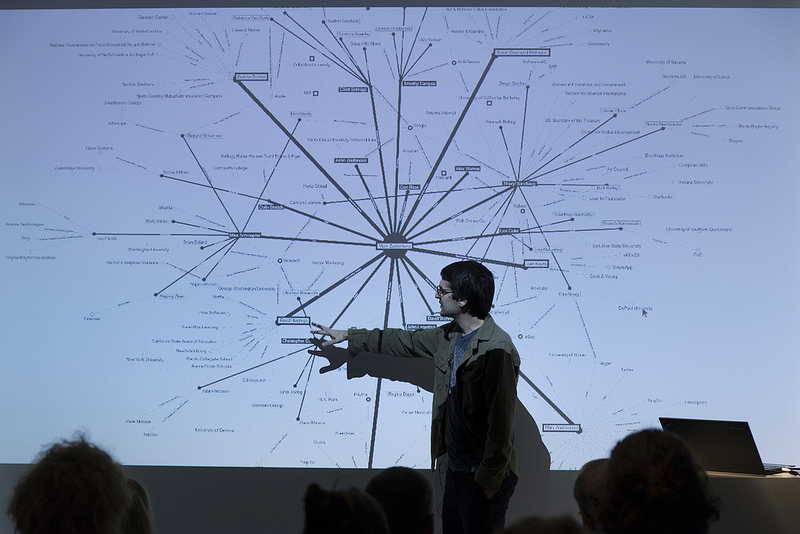

In our previous investigations (e.g. Metadata Investigation: Inside Hacking Team or Mapping and quantifying political information warfare) we relied mostly on the process of data collection and data analysis, trying to apply different methods of metadata analysis similar to the ones that organisations such as the NSA or Facebook probably use to analyse our personal data. For that we used different data collection methods and publicly available tools for data analysis (e.g. Gephi, Tableau, Raw Graphs). However, the two maps featured in the exhibition are mostly the product of a long process of diving and digging into publicly available documentation such as 8000 publicly available patents registered by Facebook, their terms of service documentation and some available reports from regulatory bodies. At the beginning, we wanted to use some data analysis methods, but we very quickly realised that the complexity of data collection operations by Facebook and the number of data points they use is so big that any kind of quantitative analysis would be almost impossible. This says a lot about our limited capacity to investigate such complex systems. By reading and watching hundreds of patents we were able to find some pieces of the vast mosaic that is the data exploitation map we were making.

So, those maps, even though they look in some way generative and made by algorithms, are basically almost drawn by hand. Sometimes it takes months to draw such a complex map, but I need to say that I really appreciate the slowness of this process. Doing it manually gives you the time to think about each detail. Those are more cognitive maps based on collected information than data visualizations.

SHARE Lab. Exploitation Forensics at Aksioma Project Space. Photo: Jure Goršič / Aksioma

In a BBC article, you are quoted as saying “If Facebook were a country, it would be bigger than China.” Which reminded me of several news stories that claim that the way the Chinese use the internet is ‘a guide to the future’ (cf. How China is changing the internet). Would you agree with that? Do you think that Facebook might eventually eat up so many apps that we’ll find ourselves in a similar situation, with our lives almost entirely mediated by Facebook?

The unprecedented concentration of wealth within the top five technology companies allows them to treat the whole world of innovation as their outsourced research and development. The anarcho-capitalist ecosystem of startups is based on a dream that at some point one of those top five mega companies will acquire them for millions of dollars.

If you just take a look at one of the graphs from our research on “The human fabric of the Facebook Pyramid” mapping the connections within Facebook top management, you will probably realise that through their board of directors they have their feet in the most important segments of technological development in combination with political power circles. This new hyper-aristocracy has the power to eat up any new innovation, any attempt that will potentially endanger their monopoly.

The other work in the Aksioma show is Anatomy of an AI system, a map that guides “visitors through the birth, life and death of one networked device, based on a centralized artificial intelligence system, Amazon Alexa, exposing its extended anatomy and various forms of exploitation.” Could you tell us a few words about this map? Does it continue the Facebook research or is it investigating different issues?

Barcelona-based artist Joana Moll infected me with this obsession with the materiality of technology. For years we were investigating networks and data flows, trying to visualise and explore different processes within those invisible infrastructures. But then, after working with Joana, I realised that each of those individual devices we were investigating has, let’s say, another dimension of existence, one that is related to the process of their birth, life and death.

We started to investigate what Jussi Parikka described as the geology of media. In parallel with that, our previous investigations had a lot to do with issues of digital labour, beautifully explained in the works of Christian Fuchs and other authors, and this brought us to investigate the complex supply chains and labour exploitation in the process.

Finally, together with Kate Crawford from the AI Now Institute, we started to develop a map that is a combination of all those aspects in one story. The result is a map of the extended anatomy of one AI-based device, in this case Amazon Echo. This anatomy goes really deep, from the process of exploitation of the metals embedded in those devices, over the different layers of the production process, hidden labour, fractal supply chains, internet infrastructures, black boxes of neural networks and the process of data exploitation, to the death of those devices. This map basically combines and visualises three forms of exploitation: exploitation of human labour, exploitation of material resources and exploitation of quantified nature or, we can say, exploitation of data sets. This map is still in a beta version and it is a first step towards something that we are calling, at this moment, AI Atlas, which should be developed together with the AI Now Institute during the next year.

Do you plan to build up an atlas with more maps over time? By looking at other social media giants? Do you have new targets in view? Other tech companies you’d like to dissect in the way you did Facebook?

The idea of an Atlas as a form has been there since the beginning of our investigations, when we explored different forms of networks and invisible infrastructures. The problem is that the deeper our investigations went, the more complex those maps became and the more they grew in size. For example, the maps exhibited at Aksioma are 4×3 m in size and still there are parts of the maps that are on the edge of readability. The complexity, scale and materiality of those maps somehow became a burden in itself. For the moment there are two main forms of materialisation of our research. The main form is the stories on our website, and recently those big printed maps have started to have a life of their own in different gallery spaces. It is just recently that our work has been exhibited in an art context and I need to say that I kind of enjoy that new turn.

SHARE Lab. Exploitation Forensics at Aksioma Project Space. Photo: Janez Janša

How are you exhibiting the maps in the gallery? Do you accompany the prints with information texts or videos that contextualize the maps?

Yes. As you mentioned before, those maps are somewhat overwhelming, complex and not so easy to understand. On the website we have stories, narratives that guide the readers through the complexities of those black boxes. But at the exhibitions we need to use different methods to help viewers navigate and understand those complex issues. Katarzyna Szymielewicz from Panoptykon Foundation created a video narrative that accompanies our Facebook map, and we usually exhibit a pile of printed Facebook patents, so visitors can explore them by themselves.

Thanks Vladan!

SHARE Lab. Exploitation Forensics is at Aksioma | Project Space in Ljubljana until 15 December 2017.

Previously: Critical investigation into the politics of the interface. An interview with Joana Moll and Uberworked and Underpaid: How Workers Are Disrupting the Digital Economy.