Born and raised in Silicon Valley, Douglas Edric Stanley has been working for ten years in France as an artist, theoretician and researcher in Paris and Aix-en-Provence. He is currently Professor of Digital Arts at the Aix-en-Provence School of Art, where he teaches programming, interactivity, networks and robotics. He has taught workshops on the production of code-based art and has shown his work at digital art exhibitions and festivals around the world. He’s also a researcher at the laboratory LOEIL in Aix-en-Provence and a PhD candidate at the Laboratory for Interactive Aesthetics at the University of Paris 8, where he explores the evolution of artistic creation in relation to the algorithmisation of the world.

A couple of months ago Douglas Edric Stanley invited me to give a talk at the Ecole Supérieure d’Art d’Aix-en-Provence. After having exchanged a few emails with him and seen what he was doing in the School, I felt like he would be a perfect interviewee: not only is his work interesting but he also explains clearly and simply notions and dynamics that so far sounded too complicated (it’s very gratifying for a non-nerd like me to finally understand all that without much intellectual effort). Best of all, he doesn’t mind saying what he really thinks.

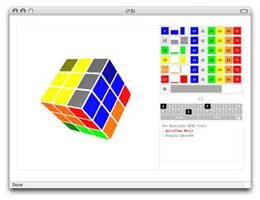

The interview is somewhat longer than usual. I didn’t feel like cutting or doing any kind of editing. It makes a great read and is full of surprising insights and rather bold statements about the (sad) state of new media art in France, making music with your Rubik’s Cube, where tangible computing is heading, what it really means to be a “new media artist” and show your stuff at prestigious festivals, etc.

Can you tell us something about the abstractmachine research projects you developed for ZeroOne San Jose?

First, a conceptual response. The abstractmachine is just that: a research project, a platform even, and not an artwork per se. One of the theoretical hypotheses I have been exploring over the past decade, and which has recently transformed itself almost into a sort of manifesto, surrounds the idea of an emerging epistême in which modularity reigns, where objects are seized by constantly shifting rules and conditions, and programmable machines not only create a new aesthetic, but even ask the question anew, “what is aesthetics?”

Often we talk about “interactivity”, and I might even be a specialist on that subject, at least I probably was once (cf. http://www.abstractmachine.net/lexique). But over time I have come to realize that interactivity is only the tip of the iceberg, and that the idea of building endless gadgets that go BOING! in the dark when you step on them is absolute vanity. At the same time I loathe the contemporary art world’s smug disdain for these same gadgets, its incapacity to see the emerging field within these often simple, almost childlike objects and installations. So I’m trying to evolve the gadget without throwing out the charm of what takes it beyond its pure gadget status.

Contemporaneous with my own personal evolution on this subject, I can see other artists around me making a similar move away from specific interactive objects as an end-all, and the emergence of a culture of software, instruments, and platforms for artistic creation. Along with my students I have even created a sort of moral compass which evolves on the following scale: reactive -> automatic -> interactive -> instrument -> platform. So the abstractmachine project is trying to make that move, to practice what we preach: from the reactive or interactive object, into forms of instruments and platforms; i.e. following the idea that we are dealing with something much larger than “tools” here (an unfortunate term), and in fact something more than even gadgets.

Now with that preamble out of the way, let me describe concretely what that means for the ZeroOne Festival. I have proposed four “terminals”. There will be a terminal for making music, a terminal for making games, and two terminals dedicated to algorithmic cinema. These terminals are linked to online “emulators”, allowing people to use the abstractmachine either online or in a physical context. If all goes well, in San Jose people will be able to access the physical terminals, whereas online people will use the emulators. The experience will not be the same, but each will have its own advantages over the other.

While these works address the idea of programming and physical access to algorithms, I’m not interested in big, massive, tentacular interfaces hooked up to cellular automata, modular robotic structures, or massive neural networks linked to some wacky biometric swimsuit. “Algorithm” does not equal complicated mumbo-jumbo. All of the terminals from the abstractmachine are simple, using simple interfaces: a Rubik’s Cube for making music, a Gameboy for making games, a Lego webcam for making movies coupled with a touchable surface for exploring them. These machines all allow people to play with these media and objects/images/sounds algorithmically. The logic is often that of a puzzle, a toy, a mosaic, while being at the same time a very simple but effective form of computer programming. These are real algorithms, but manipulated via simple objects and gestures.

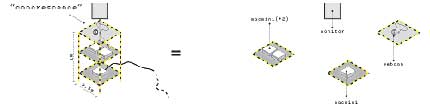

Diagram of Concrescence

The shift to interactivity was historically a profound and sensible one, but what came with it — the algorithms behind all that interactivity — was the real shift. But what is an algorithm? Can you hold it? Does it look like anything? Can anyone play with it? The answer is of course yes. Over the years, I’ve come to realize that this added supplement is actually quite simple, and can be accessed by anyone. In fact, there is nothing really ontologically all that different — at least from my perspective — between programming a game and playing it. The developers have just cut us off from the compiler, but we still have a relationship with the source code which we read by playing the game. When you play a game you are just de-programming it, exploring how it was built in reverse. You touch the code, you play it, you put it to work. So why not make that process more apparent to the users? We must absolutely start making the process palpable, and more importantly, palatable.

The most obvious example of how I try to do this is also the most fun. It’s called ^3, or simply cubed. The idea is simple: take a Rubik’s Cube and make a musical composition instrument out of it. Each face on the cube is a separate instrument, and the colors represent the notes on that instrument. The speeds of each instrument/face, as well as their volume are based on how you position the cube. Each face is played in a loop, just like any other basic electronic music sequencer, and by manipulating the cube you manipulate the sequencer.

The beauty of electronic music sequencers is that the loops are so short that the act of composition (laying down the notes) gets confused with the act of performing those notes. If you’re good enough, you can switch the notes between two loops, and end up turning a compositional sequencer into a note-for-note musical instrument. So while ^3 is clearly a musical sequencer — something designed for making modular musical structures — it can also, with a little panache, be used as a full-scale live musical instrument.
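As a rough illustration of the sequencer idea described above, here is a minimal Python sketch. It is not the actual ^3 code: the color-to-note mapping, the function names and the use of MIDI note numbers are all assumptions made for the example. It treats one 3x3 face as a nine-step loop and shows how turning that face reorders the loop.

```python
# Hypothetical sketch: one Rubik's Cube face used as a looping 9-step sequencer.
# The mapping from colors to MIDI note numbers is an assumption for illustration.
COLOR_TO_NOTE = {
    "white": 60,   # C
    "yellow": 62,  # D
    "red": 64,     # E
    "orange": 65,  # F
    "blue": 67,    # G
    "green": 69,   # A
}


def face_to_loop(face):
    """Flatten a 3x3 face (rows of color names) into a list of 9 note numbers."""
    return [COLOR_TO_NOTE[color] for row in face for color in row]


def play_steps(loop, n_steps):
    """Return the notes heard over n_steps, wrapping around like a sequencer."""
    return [loop[i % len(loop)] for i in range(n_steps)]


def rotate_face_clockwise(face):
    """Turn the face a quarter turn clockwise, which reorders the loop."""
    return [list(row) for row in zip(*face[::-1])]


face = [
    ["white", "red", "blue"],
    ["white", "white", "green"],
    ["yellow", "orange", "red"],
]
loop = face_to_loop(face)
rotated_loop = face_to_loop(rotate_face_clockwise(face))
```

On a real cube a quarter turn of one face also moves stickers on the four neighbouring faces, which is why changing one loop disturbs the others; this sketch leaves that part out.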

The program is free if you use it online, and interface with any MIDI compatible instrument/software. Of course if you come down to the show in San Jose you’ll be able to compose using a real-live cube, and even Bring Your Own Rubik’s Cube — hence the latest moniker: BYORC music. I’m just waiting for some goofy speedcubing DJ to interface it into an insane breakcore patch and go apeshit with it. Add to that the visual dimension, where you’re modifying your composition algorithmically on the fly, in front of a crowd with a real-live Rubik’s Cube, and you’ve got quite a show.

The idea came to me while watching an Autechre concert. The sound system sucked, and the only goop that filtered through came from the massive looping rhythmic structures. I watched them building these structures, and all I could make out were these little flashing lights running across some rhythm sequencer with adjustable knobs. I suppose there was Max/MSP in there somewhere — they’re famous for it — but this big linear sequencer really seemed to have a central importance in the construction of the music. Those sequencers are fun, but is this really where we’re going with electronic music? Humanity has been at it for over 50 years, and the best we can come up with as an ideal electronic instrument is a 303 Groovebox ¡#@?¿! So I got to thinking of something that would be fun, visual, simple by design, but insanely difficult for the musician to master. Permutate one face and you’ve just fucked up your perfect rhythm on the others. Each rhythmic and melodic structure gets tied up in a web that can only be mastered by learning the simple but difficult-to-memorize algorithms of the Rubik’s Cube. It’s a sort of acid-test for electronic music nerds. Let’s finally get those knob-twiddlers onto some really-fucking-difficult instruments!

Don’t miss the video!

Why this interest for the Rubik cube? Fond childhood memories?

Actually not. I sucked at the Rubik’s Cube, and couldn’t solve it. My siblings knew how to solve the cube, as did many of my geek friends. And as I grew up in Silicon Valley, right in the heart of the emerging home computer/BBS/video game phenomena, there were indeed quite a few future nerds hanging around who knew how to solve the cube. I had no such talents. I suffered a similar fate when it came to programming. It wasn’t until I was studying at the Collège International de Philosophie that I started to realize the ontological importance of these machines and finally got to work figuring them out. So I had to move to Paris — and study philosophy of all things — to understand where I came from, and why things like Simon and the Rubik’s Cube and Pacman were all part of an emerging culture of the algorithm that I had grown up with.

But even those who grew up in the middle of nowhere know the Rubik’s Cube, it is not just a nerd phenomenon. So it’s got a tinge of 80’s nostalgia, sure, but it’s mostly about finding the most well-known interfaces for playing with algorithms, and turning those into instruments.

You work on programming, interactivity, networks and robotics. That looks like a lot of quite different disciplines to me. Do you agree? How can you keep up with the fast developments of each one?

It’s actually a lot of work just keeping up with it all — each of these axes moves so fast (perhaps with the exception of interactivity) — and indeed I’m becoming a bit tired trying to follow all these fronts. I gave myself ten years to get a grasp on what’s going on. Those ten years are more or less up, and it’s time to move on.

Light on the Net and Telegarden

That said, these subjects are in fact tied intimately together. To think that you could specialize in interactive graphics without following the evolution of the networks building up around us is absurd. Meanwhile these interactive visual programs are moving into daily objects (= why design is so important) and these objects are becoming more and more the modular Graphical User Interface that we formerly only knew via computer screens. So robotics, or at least electronics, rather than being separate fields, become in fact the corollaries to what’s happening within the screen, and in fact a sort of interface with the networked graphical world. Network + image + robotics + electronics are becoming more and more confused with one another, starting from Masaki Fujihata’s Light on the Net on the one hand, and Ken Goldberg’s Telegarden on the other, and moving on from there. These will eventually lead to algorithmic botanical structures and growable dynamic media architectures and stuff we don’t even really understand yet. Add to that the modular biological epistême courageously explored by The Critical Art Ensemble, Preemptive Media, Eduardo Kac, Joe Davis, etc, and you’ve got a lot of convergence (oops, “Transvergence”) going on.

You can also add a new category to the above list: along with programming and networks and robotics I am currently adding 3D modeling to my arsenal, with a new project to build a commercial video game. This is also one of the reasons I’m really excited about the introduction of Processing into my atelier in Aix-en-Provence. I’ve always wanted to program in OpenGL with the students, but it was too hard for them to get started. In Processing, working in 3D is pretty much the same as working in 2D. It’s actually quite interesting for me to finally have an environment that explicitly addresses this multifaceted way of thinking/working. With Processing we finally have an environment where we can move back and forth from text to images to graphics to motors to models to rapid prototyping machines to sensors and so on. As much as I loved Macromedia Director and hated Flash because I couldn’t plug it into anything, I have to admit that Director has really lost that edge of being able to plug into anything, which is essentially what we do in Aix-en-Provence. And you just can’t beat a bunch of motivated artists working inside of an open-source context. So the software and hardware platforms are also moving in this direction of hybrid objects, just like my Curriculum Vitae. The interactive widget really wants to “get physical”.

So to go back to the list, the current interests are: programming, interactivity, networks, robotics, simulation, and video games. Along with robotics, it is pretty clear by now that there is something happening in video games — even if they still suffer from everything interactive art suffers from. But unlike interactive art, video games are starting to poke their heads out from under the surface, and I want to explore that. Sony and Microsoft are still totally oblivious, but Nintendo seems to be on to something. I have yet to get my hands on the Wii controller, but if it lives up to its promise, we’ve definitely taken a step forward in interactivity. And we will have taken a further step into mixing all these fields up.

Can you give us a glimpse of your research about the evolution of artistic creation in relation to the algorithmisation of the world?

Basically, I am interested in the algorithmic nature of the computer, in distinction from its digital or computational aspect. When we talk of algorithms, we are talking about the process of the computer, how it can order tasks and activities and adjust itself to incoming data.

While the word “algorithm” sounds complicated, it’s actually a very simple idea. It’s the part of the computer that actually lets you *do stuff* and knows how to *do stuff* by itself. You give it some instructions (the program) and it will do stuff with those instructions. If you format things in a way that the computer can understand, it will be able to do stuff with it. Doing stuff with stuff, that’s what algorithms are all about. So you can call it programming, but you can also call it algorithms, which allows you to step back from the nitty-gritty messy computer code stuff, and just look at the dynamic, modular nature of these machines, from a more theoretical aspect. And from this point of view, the world is slowly being formatted in such a way that anything and everything can enter into algorithmic processes – so that everything and anything can be manipulated by automatic machines.

This in fact ties in with the previous response. I believe that we can now see that robotics is slowly rendering physical the algorithms that we formerly only knew on-screen, that’s why I sometimes speak of the “physicalization” of algorithms, which is different from a materialization which would suggest a more natural process. Robotics is a sort of Application Programming Interface to the physical world, a way of making the physical world accessible to arrays, loops, pointers, boolean operators and subroutines. A few years ago, I went out to get research funding here in France around the idea of robotics as being the next GUI. I called that project “Object-Oriented Objects”. The screen is often just a simulation window for something that wants to be physical, and when you see everything that’s going on with rapid prototyping this intuition appears to (literally) be fleshing out. The infinite modularity of the computer does not just stop at the screen, at folders, or copy-paste media. This modularity is very powerful, and goes far beyond the screen. Obviously a lot of this has already been explored by the Media Lab crowd — they’re the real precursors on this front. That said, I don’t think we’ve explored the theoretical implications of this transformation enough. I still see sensors and actuators : stuff that captures data, and stuff that makes noise and moves other stuff. These two activities should ultimately collapse onto one another : capturing data = moving stuff; moving stuff = capturing data. Hence the proposal for the Rubik’s Cube as a musical instrument: here’s an algorithm, it’s physical, you can touch it, it makes music, and all of these things mean the same thing.

Asymptote, Honorary Mention at the Prix Ars Electronica 2000

How do you explain what you’re doing to someone who has never heard of interactive art or code-based art?

Interactive art is *really* hard to describe, because you often have to wade through all the a priori assumptions about “user control” or “freedom of choice”, and so on. Often, when I show my algorithmic cinema platform, people ask me: are the images just chosen at random? When I answer, no, there is a program proposing new images based on what you do, they reply: “oh, so it’s pre-programmed”. I.e. for most people, “interactivity” is opposed to “programmed”, which is of course totally absurd when you think about it. What they are truly talking about are complex metaphysical concepts of chance, fate, predestination, and thus time. Interactivity is a far more specific phenomenon, but has all these theoretical a priori assumptions mixed in, even for those with no explicit grasp on these concepts.

Ironically enough, when it comes to code-based art, it’s a lot easier. You just need to know who you’re talking to, and adapt it to them. In the end, these concepts are not all that difficult.

Let me give you an example using one of my favorite data structures, the “array”. When you program a computer, you often use this thing called an array. An array is something that mostly holds numbers, and looks something like this:

list_of_numbers = {1, 2, 3, 64, 63, 4, 16, 61, 33, 22} ;

The interesting thing in programming is that this list of numbers could be used for all kinds of stuff. This list, for example, could be a list of numbers that describe the musical notes in a composition. If you can imagine a piano, now imagine that you have assigned a number to each note — note 1 would be the note at the far left, note two the note next to it, and so on. When you play the notes in order, you get a little solfege: {1, 2, 3, 4, 5, 6} = “Do, Re, Mi, Fa, So, La”. An array could hold that list of notes, and be able to play them in order to make a little song.
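To make the note example concrete, here is a small Python sketch. The numbering is an assumption for illustration: it counts scale steps starting at Do (so 1 = Do, 2 = Re, and so on, wrapping after seven steps) and does not model a real piano keyboard with its black keys.

```python
# Hypothetical sketch: an array of numbers read as a sequence of notes.
# Assumption: 1 = Do, 2 = Re, ... and the scale repeats every seven steps.
SOLFEGE = ["Do", "Re", "Mi", "Fa", "So", "La", "Ti"]


def note_name(number):
    """Translate a 1-based scale step into its solfege syllable."""
    return SOLFEGE[(number - 1) % len(SOLFEGE)]


def play_in_order(notes):
    """'Play' the array by walking through it from first to last."""
    return [note_name(n) for n in notes]


melody = [1, 2, 3, 4, 5, 6]
sung = play_in_order(melody)
```

The array itself knows nothing about music; it is the small `note_name` function that decides to read each number as a note.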

Arrays are also used to hold lists of characters, i.e. text. When users read what I am saying on your website, they will, in fact, be reading an array. Of course they won’t be saying to themselves, “what a boring array”, for all they will see is the text. But the computer will see it as an array. Each letter in the array is represented inside the computer with a number : A = 65, B = 66, C = 67, …, a = 97, b = 98, c = 99, and so on. So when I write “Hello”, in my word processor, I am just filling up an array with a bunch of numbers, i.e. 72, 101, 108, 108, 111. So while your readers will see “Hello”, their computers will see a list of numbers: 72, 101, 108, 108, 111.
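The same point can be checked in a few lines of Python. This is only a sketch with made-up function names; it uses the built-in `ord` and `chr` functions, which convert between a character and its numeric code.

```python
# Sketch: text seen as an array of numbers, and turned back into text.
def text_to_codes(text):
    """Return the list of character codes the computer stores for the text."""
    return [ord(character) for character in text]


def codes_to_text(codes):
    """Rebuild the readable text from a list of character codes."""
    return "".join(chr(code) for code in codes)


codes = text_to_codes("Hello")
round_trip = codes_to_text(codes)
```

Here `codes` holds the five numbers quoted above, and `round_trip` is the word "Hello" again.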

Now imagine that you’re playing Super Mario Bros. way back in 1985. Since Super Mario Bros. is drawn flat, from the side — i.e. in two dimensions — you could use the above list to describe all the objects Mario has to jump over as he walks along the path. 1 = mushroom, 2 = brick, 3 = hole, 4 = pipe, and so on. As Mario walks along his path, the computer looks in the list, sees the number in the array and draws the right object in that place. Or you could use it in a game like Moon Patrol from 1982. If you were programming Moon Patrol you could use this array thing to draw the potholes in the ground, or how high the mountains are supposed to be in the background.
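A toy version of that level-drawing idea might look like the Python sketch below. It is in no way how Super Mario Bros. or Moon Patrol were actually programmed; the text symbols and function name are invented for the example, and only the four object codes come from the description above.

```python
# Hypothetical sketch: the same kind of array read as a side-scrolling level.
# Codes 1 to 4 follow the text; the drawing symbols are assumptions.
TILE_SYMBOLS = {
    1: "m",  # mushroom
    2: "#",  # brick
    3: "_",  # hole
    4: "|",  # pipe
}


def render_level(level):
    """Draw one character per array entry, left to right along the path."""
    return "".join(TILE_SYMBOLS.get(tile, "?") for tile in level)


list_of_numbers = [1, 2, 3, 64, 63, 4, 16, 61, 33, 22]
picture = render_level(list_of_numbers)
```

Numbers with no assigned object are drawn as `?` here, standing in for the "and so on" in the list of objects.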

70’s synthesizer and SimTunes by Toshio Iwai

Now imagine that you’re a creative guy like Toshio Iwai, and you also know how to program. Someone like Toshio knows all about arrays and things of the sort, and he knows that you can actually use them to do BOTH: i.e. to draw the graphics and to make the music. You can use the same technical structure to do both things. Suddenly drawing = making music, which is a brilliant move. Toshio of course didn’t invent this idea, but from a programming perspective he really turned it into an art form. He understood how the same programming structures could be applied to many different uses. From the perspective of the computer, it’s just a list of numbers. But for the programmer it’s one of the basic things you need to make something like music, or to draw certain types of pictures. What happens when you conflate those two uses? What happens if you switch them, or plug the output of one into the input of the other? It’s like patch cords on an old 70’s synthesizer, only here the computer can turn text into sounds into images back into sounds into whatever. This is why I talk about abstract machines, because the data is getting ontologically transformed as it passes through the circuitry. So knowing how to mix and match all that stuff is what renders different ideas functional.
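Here is one way to picture that double use in Python. It is a sketch under stated assumptions, not a description of SimTunes or any of Toshio Iwai's software: the function names are invented, the "drawing" is just rows of `#` characters, and the pitch formula assumes equal-tempered semitones above a base of 220 Hz.

```python
# Hypothetical sketch: one array, two readings (a picture and a melody).
def as_bars(values):
    """Read each number as the length of a bar in a simple text drawing."""
    return ["#" * value for value in values]


def as_frequencies(values, base_hz=220.0):
    """Read each number as a count of semitones above a base pitch, in Hz."""
    return [base_hz * 2 ** (value / 12) for value in values]


shared_data = [0, 3, 7, 12]
drawing = as_bars(shared_data)           # the array as an image
melody = as_frequencies(shared_data)     # the very same array as sound
```

Nothing in `shared_data` says whether it is a picture or a tune; swapping which function receives it is the software equivalent of moving a patch cord.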

Now, what interests me, and why I’m so interested in code-based art, is watching how people move from one concept to the next, and how previously illogical compatibilities become logical and compatible. At some point, it becomes what Gilles Deleuze says about questions – it’s more important to pay attention to the question than to the answers they generate. For me it is less about the results or what answers people are looking for in their code (i.e. the “goal” of the program), and more about how they got there, what kind of questions they asked, and what “problems” are formulated within the question itself. The answer, for Deleuze, is secondary to what was formulated inside of the question. In the same sense, I am interested in what sort of algorithms, what sort of code structures are at work inside the attempt to make such and such a program.

You can often see the evolution of these structures at play within an artist’s work over a period of time. I’m not really looking at the code as an end in-and-of-itself, but rather as the key to a way of thinking. If you’re clear about that way of thinking, then anyone who’s paying attention should be able to understand.

You have developed several applications for the Abstract Machine Hypertable, do you plan to work on new ones? Are you still excited by that interface?

The Hypertable was originally designed for a single application: Concrescence, my platform for making algorithmic cinema. It was not designed as a point-and-click interface for your hands. It had a very specific approach to a very old dream: being able to touch and manipulate images with your hands. Many important artists have come before me: Myron Krueger, Masaki Fujihata, Jean-Louis Boissier, Diller + Scofidio, Michael Naimark, Christa Sommerer & Laurent Mignonneau. Many technologists and companies could also be mentioned. The list is endless. And recently, two developments have totally changed the future of hypertables and hypersurfaces: Jeff Han’s Multi-touch Interface video, and Apple’s buyout of Fingerworks.

Jeff Han starts from a video analysis system very similar to mine, but adds an important twist which gives him the ability to actually know which fingers touch the surface, and thus manipulate objects on the surface, much as you might do with a computer mouse, only with all your fingers. My hypertable was never designed for this, and Jeff’s solution is totally ‘Airwolf’, so it will probably become the basis for a lot of future work. As with many technologies, he wasn’t the first to develop this idea; there is even a previous product out there using different technology with similar results. But Jeff’s system has just that right “holy shit!” factor that has everyone excited about the possibilities of interactive surfaces.

The other development is Apple’s future iPod, which is obviously designed for huge impact, but unfortunately will be a war waged with patents. We probably won’t have access to this new system. In fact they are already facing a lawsuit on this front, meaning all of us who have been making designs with similar technologies need to be very afraid. But outside of the political and economic ramifications of Apple’s solution, I think there is an important paradigm shift, especially when you read Apple’s specific patent request on “Gestures for Touch Sensitive Input Devices”. The shift is in the idea of reversibility, which goes back to my previous comments on robotics as the next GUI. Apple is turning the screen into a low resolution camera by placing sensors next to each red, green, and blue light combination that makes up the LCD screen. The screen IS the camera. Rather than having a one-way surface, as we currently do now, the Apple patent seeks something akin to David Cronenberg’s image of the erotic television set from Videodrome: you can see it and it can see you. Reversibility is at work everywhere in programmable machines, but here we have a very tangible (pun intended) example of this transformation at work. In fact you can take this concept — reversibility — and apply it to many of the emerging technologies, for it is one of the new ways of thinking, fundamental to the new epistême.

Going back to the Hypertable, I really see it as a specific design, which was based more on the idea of a hand’s “presence” than that of its gesture. Put your hand on the table and things grow around it. What I wanted was something to allow you to grow cinematic sequences around your hands, and not something that would allow you — Minority Report style — to manipulate images like toys. I wanted something subtle and I think I got it. And once I got it, I figured I was done and started moving on to the next project.

But something interesting happened along the way. My assistant at the time, Pierre-Erick Lefebvre (a.k.a. Jankenpopp), got very excited about the musical possibilities of the hypertable, and proposed finding new uses for it. At the same time I had several commercial propositions, most of which were bogus, or didn’t understand the way my system worked. So I decided to explore these two directions, albeit selectively: commercial design on the one hand, experimental musical instrument on the other. On the experimental front, I directed a workshop at the Postgrade Nouveaux Médias at the Haute Ecole d’Arts Appliqués in Geneva, where Pierre-Erick and I worked with a small group of their students to find new uses for the Hypertable. As the whole system was designed to be easily programmable, we ended up with a half-dozen propositions in just under four days of work. The first day, in fact, was occupied by reviewing how to program a computer.

Based on the experiences in that workshop, I asked Pierre-Erick to bring together a musical group which eventually became 8=8. 8=8 = 4 programmers * 2 hands = 4 musicians * 2 hands = etc. All of our programs are music-generating interactive visual surfaces, the idea being that we generate our own visual musical environments rather than using pre-baked software. All the programs are images that generate sound rather than the other way around. We had our second performance, this time at the Scopitone Festival in Nantes, on June 1st and 2nd of this year.

So oddly enough, a very specific technology, designed for a very specific use, has actually been twisted and turned into all sorts of different uses. Fortunately I design everything these days as a platform, even when it is intended for a specific purpose. Everything is repurpose-able and a lot of my code and designs get recycled into other people’s work, mostly via my Atelier Hypermedia in Aix-en-Provence. So who knows, perhaps the Hypertable will have yet again another permutation into something else. For me, it is just further proof that if you design it as a modular object, i.e. as a platform and not a gadget, the uses will more or less discover themselves.

You’ve lived, worked and taught in France for a long time now. What do you think of the new media art scene in that country?

Nothing less than an absolute catastrophe. As attached as I am to the French language, culture, and thought, I couldn’t be in worse company when it comes to understanding what is at work in new media, and don’t even dare to talk about French new media art. Just look at the Dorkbot map: where’s France on that map? It’s like a big hole. The French don’t understand things like Dorkbot, even if there probably are a few potential dorkbotters here and there. To give another example: a little over a year ago, there was an important conference on code-based art at the Sorbonne. The French speakers were totally out of touch, especially when you juxtaposed them to the run.me crowd, Transmediale, or the live coders. Sure, we have Antoine Schmitt, but that’s just one artist, and he’s coming from a very different place than the live coder scene. Run.me was in its second or third year, and Transmediale had already distributed several years’ worth of awards to code-based art, while the French were just beginning to scratch their heads, “Hmmm, what’s this?”

(continue reading the interview of Douglas Edric Stanley)