AI Snake Oil. What Artificial Intelligence Can Do, What It Can’t, and How to Tell the Difference, by Arvind Narayanan, a professor of computer science at Princeton and the director of the Center for Information Technology Policy, and Sayash Kapoor, a computer science Ph.D. candidate at Princeton University’s Center for Information Technology Policy. The book will be published by Princeton University Press on the 24th of September, but you can already read the intro online.

Finally! A book on AI that doesn’t make me want to slit my wrists out of despair or tedium. A book that is didactic without being obvious or basic, critical of the many flaws and limits of technology while remaining optimistic about how AI might improve. It’s a serious book, but its authors have a sense of humour. In reference to the total absence of consensus about what is and isn’t AI, they define AI as “whatever hasn’t been done yet.” I also like the writing style, its clarity and the odd memorable formula: “Unlike Predictive AI, which is dangerous because it doesn’t work, AI for image classification is dangerous precisely because it works so well.”

Since AI is an umbrella term for a set of loosely related technologies, Kapoor and Narayanan chose to focus on specific types of AI: predictive AI, generative AI and content moderation AI. They also make a small incursion into the merry world of paperclips and artificial general intelligence to evaluate (and dismiss) the claim that a rogue AI would constitute an existential risk for humanity.

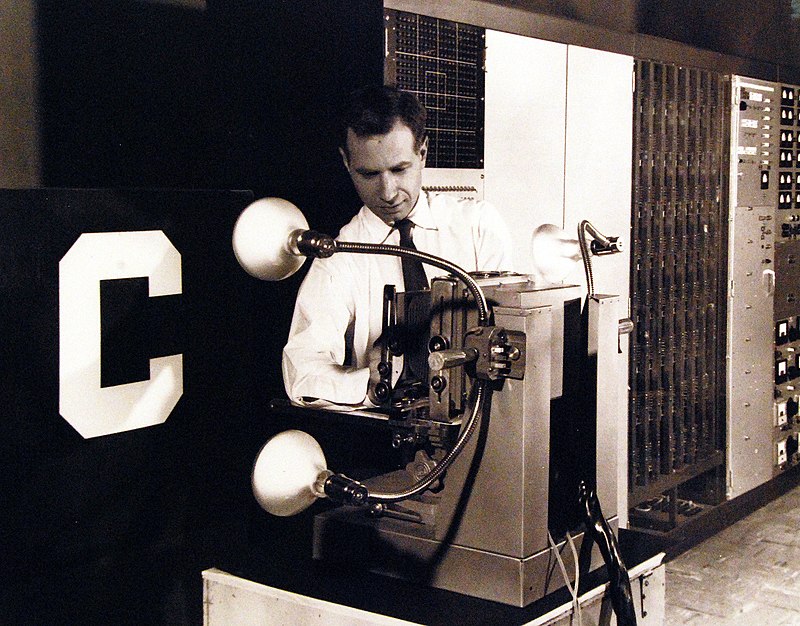

The Mark I Perceptron, being adjusted by Charles Wightman (Mark I Perceptron project engineer). Photo

Stop ShotStopper. Photo found on Organizing my Thoughts

AI Snake Oil is a guide aimed at helping us distinguish between hype and genuine progress, between reality and promises, fiction and feasible future. Narayanan and Kapoor believe that, if we want to influence how AI is being implemented, we need to be able to assess the plausibility of purported advances. They explain how the various types of AI work, how the history of AI research unfolded, and they examine cases of past failures to identify where the technology lets us down. More often than not, it seems that fixing or mitigating a problem is not a question of more data and better models. It’s a question of citizens, researchers, unions, media and policymakers developing a deeper understanding of AI and demanding a fairer, more robust and transparent technology.

Kapoor and Narayanan may both be computer scientists, but that doesn’t prevent them from seeing where humans fall short (journalists who do not have the time, competence or means to do rigorous investigative work before publishing an article about AI, for example), where humans push back by being unpredictable or simply by being lucky, and what humans can do to resist the automation of every aspect of their individual and social existence.

The authors of the book give some encouraging examples of such resistance: the modern labour movement that followed the early years of the Industrial Revolution; Karla Ortiz, who fights against generative AI’s appropriation of the creative labour of artists, photographers and writers who get neither credit nor compensation in exchange for their content; and an Indian non-profit startup that, in contrast with AI projects that rely on globally distributed precarious work, pays 20 to 30 times the local minimum wage and allows data annotation workers to retain ownership of the data they create.

We already knew that AI should not be left in the hands of the people who currently control its development and implementation. AI Snake Oil demonstrates why that would be dangerous and what we can do collectively to regain some control over the technology.

Related book reviews: BLACK BOX CARTOGRAPHY. A critical cartography of the Internet and beyond, Invention and Innovation. A Brief History of Hype and Failure, AI in the Wild. Sustainability in the Age of Artificial Intelligence, etc.