DocLab Expo: Uncharted Rituals. Exhibition view in de Brakke Grond, part of the International Documentary Film festival Amsterdam. Photo Nichon Glerum

Jonathan Harris exhibition in de Brakke Grond, part of the International Documentary Film festival Amsterdam. Photo Nichon Glerum

The 11th edition of IDFA DocLab closed on Sunday at De Brakke Grond in Amsterdam. An integral part of the International Documentary Film Festival Amsterdam (IDFA), DocLab looks at how contemporary artists, designers, filmmakers and other creators use technology to devise and pioneer new forms of documentary storytelling. It’s a space for debates, conversations, VR experiences, interactive experiments and workshops.

For some reason, i thought that this year’s programme was even more intense than in previous years and i’m going to need 3 blog posts to cover all the ideas and projects i found particularly interesting. There will be one story summing up the notes i took during the DocLab: Interactive Conference. Another post will briefly comment on some of the interactive documentaries i saw in Amsterdam and back home. And today, i’d like to look at a couple of installations that explore the main theme of the festival: Uncharted Rituals or how we have to constantly, subtly and often unknowingly adjust our behaviour and mindset to technology. Instead of the other way round.

Robots and computers are acting more and more like people. They’re driving around in cars, hooking us up with new lovers and talking to us out of the blue. But is the opposite also true— are people acting more and more like robots?

The computers may think so: addicted to our phones, caught in virtual filter bubbles and dependent on just a handful of tech companies, people are acting more and more predictably. The breakthrough of artificial intelligence and immersive media doesn’t just pose the question of what technology does to us, but also what we do with this technology.

I have only 3 works to submit to you today but each of them makes valuable comments about the way we might one day have to dance with and around technology in order to coexist with it:

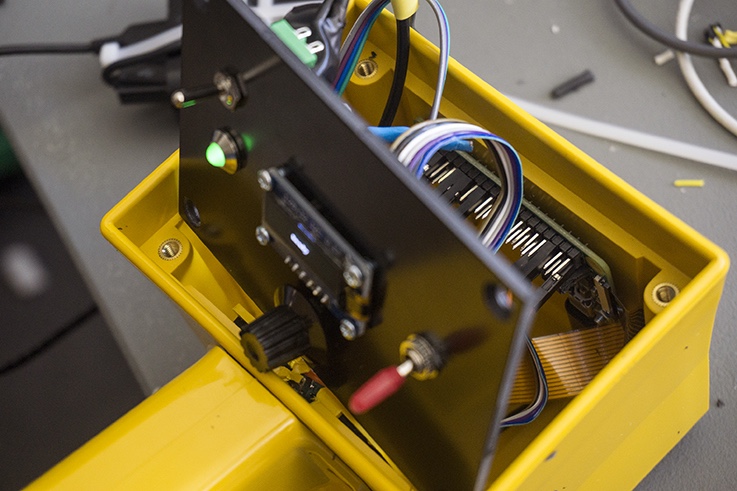

Max Pinckers and Dries Depoorter, Trophy Camera v0.9, 2017

Max Pinckers and Dries Depoorter, Trophy Camera v0.9, 2017

Max Pinckers and Dries Depoorter, Trophy Camera v0.9 at DocLab Expo: Uncharted Rituals. Exhibition view in de Brakke Grond, part of the International Documentary Film festival Amsterdam. Photo Nichon Glerum

A photographic image is never objective. It is always framed by human aesthetic choices, agendas and conscious or unconscious bias. The Trophy Camera v0.9 aggregates this element of human subjectivity into a photo camera that can only make award-winning pictures.

The AI-powered camera, developed by photographer Max Pinckers and media artist (and DocLab Academy alumnus) Dries Depoorter, has been trained by all the photos that have won an award at the World Press Photo competition, from 1955 to the present.

Based on the identification of labeled patterns, the experimental device is programmed to identify, shoot and save only images that it predicts have at least a 90% chance of winning the competition. These photos are then automatically uploaded to a dedicated website: trophy.camera. I tried several times but my photos were never deemed award-worthy by the camera.

Burhan Ozbilici, WPP of the year 2017

and its trophy.camera version?

The work reminded me of the World Press Photo awards of 2011 when Michael Wolf won an honorary mention in Contemporary Issues with a photo he made by placing a camera on a tripod in front of a computer screen running Google Street View. The award raised a heated debate among photographers. For some of them, Wolf didn’t take the pictures, the cameras on Google street car automatically did it. This is therefore not photojournalism. And yet, who would have paid attention to these scenes if Wolf hadn’t recognized and framed them?

Trophy Camera v0.9 is tongue-in-cheek and irreverent but it points to a future when algorithms will win prizes that have traditionally recognized human creativity and vision.

Google, Microsoft and other tech companies are fighting over patents for the smart glasses that scan the environment and layer information over it.

One company owns the rights to scanning common hand gestures, while another holds a patent on helping you to cross the road. Patent Alert exposes the patenting obstacles that will intrude on our experiences with augmented reality headsets once the technology becomes mainstream.

Sander Veenhof created a HoloLens app that uses a cloud-based Computer Vision library to analyse your surrounding and warn you about gestures and behaviours that are not allowed because they are covered by a patent that’s not owned by the supplier of the device you are wearing.

Memo Akten, Learning to see: Hello World! [WIP R&D 3]

Memo Akten, Learning to See: Hello World! at DocLab Expo: Uncharted Rituals. Exhibition view in de Brakke Grond, part of the International Documentary Film festival Amsterdam. Photo Nichon Glerum

Memo Akten‘s Learning to See series of works uses Machine Learning algorithms to reflect on how we make sense of the world and consequently distort it, influenced by our expectations.

One of the investigations in the series, Hello, World!, explores the process of learning and understanding developed by a deep neural network “opening its eyes for the first time.”

The neural network starts off completely blank. It will learn by looking for patterns in what it’s seeing. Over time, the system will build up a database of similarities and connections and use it to make predictions of the future.

Interestingly, Akten’s description of the learning process holds a mirror back to us: But the network is training in realtime, it’s constantly learning, and updating its ‘filters’ and ‘weights’, to try and improve its compressor, to find more optimal and compact internal representations, to build a more ‘universal world-view’ upon which it can hope to reconstruct future experiences. Unfortunately though, the network also ‘forgets’. When too much new information comes in, and it doesn’t re-encounter past experiences, it slowly loses those filters and representations required to reconstruct those past experiences.

How far can we go when we draw parallels between the way a computer trains itself and the way we learn? Are humans the only ones who are capable of turning learning into understanding? Or will computers beat us at that too one day? But perhaps more crucially, can computers help us see and oppose our own cognitive biases?