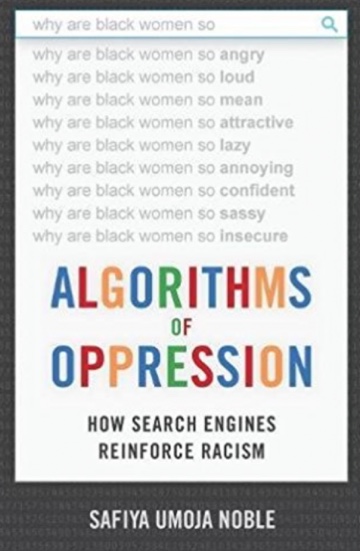

Algorithms of Oppression. How Search Engines Reinforce Racism, by Dr. Safiya Umoja Noble, a co-founder of the Information Ethics & Equity Institute and assistant professor on the faculty of the University of Southern California Annenberg School of Communication.

Publisher NYU Press writes: Run a Google search for “black girls”—what will you find? “Big Booty” and other sexually explicit terms are likely to come up as top search terms. But, if you type in “white girls,” the results are radically different. The suggested porn sites and un-moderated discussions about “why black women are so sassy” or “why black women are so angry” presents a disturbing portrait of black womanhood in modern society. In Algorithms of Oppression, Safiya Umoja Noble challenges the idea that search engines like Google offer an equal playing field for all forms of ideas, identities, and activities. Data discrimination is a real social problem; Noble argues that the combination of private interests in promoting certain sites, along with the monopoly status of a relatively small number of Internet search engines, leads to a biased set of search algorithms that privilege whiteness and discriminate against people of color, specifically women of color.

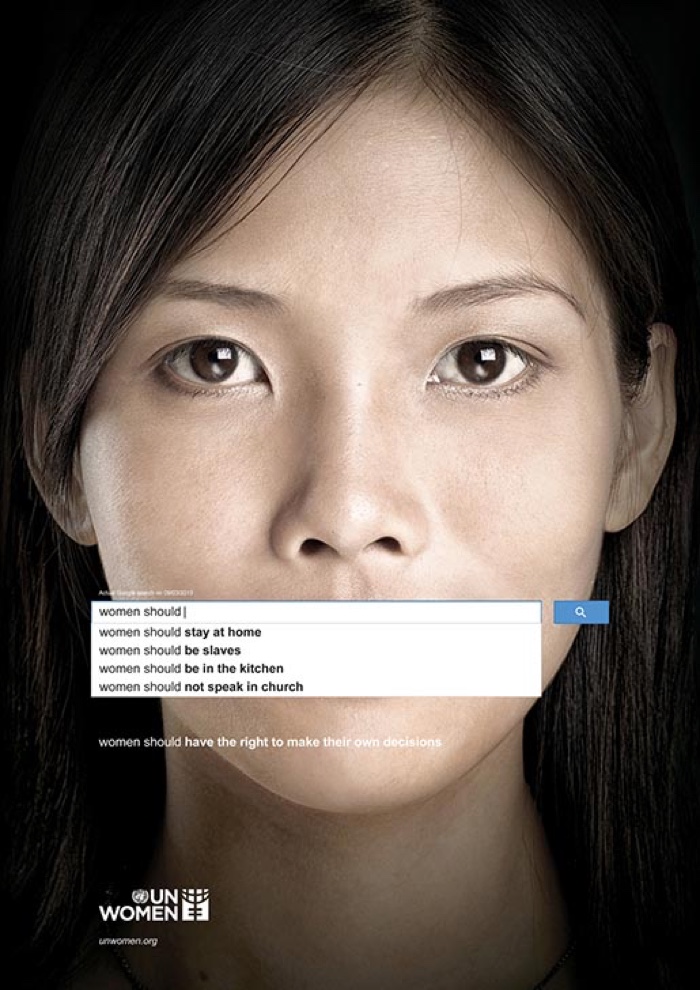

UN Women campaign, 2013. Credit: Memac Ogilvy & Mather Dubai

Back in 2009, Safiya Umoja Noble googled the words “black girls.” To her horror, the search yielded mostly pornographic results. A similar search for “white girls” returned far less demeaning content. The lewd results from that Google search are far less prominent nowadays, but this doesn’t mean that Noble’s inquiry into how race and gender are embedded into Google’s search engine has lost its purpose. Google, her book demonstrates, is still a world in which the white male gaze prevails.

The author sets the stage for her critique of corporate information control by debunking the many myths and illusions that surround the internet. She explains that, no, the Google search engine is neither neutral nor objective; yes, Google does bear some responsibility for its search results, which are not purely computer-generated; and no, Google is not a public service or a public information resource like a library or a school.

Google is not the ideal candidate for the title of ‘greatest purveyor of critical information infused with a historical and contextual meaning.’ First, Google might claim that it is an inclusive company, but its diversity scorecard proves otherwise. While it is slowly improving, it’s still nothing worth shouting from the rooftops about. And it’s not just Google: a similar lack of diversity can be observed all over Silicon Valley.

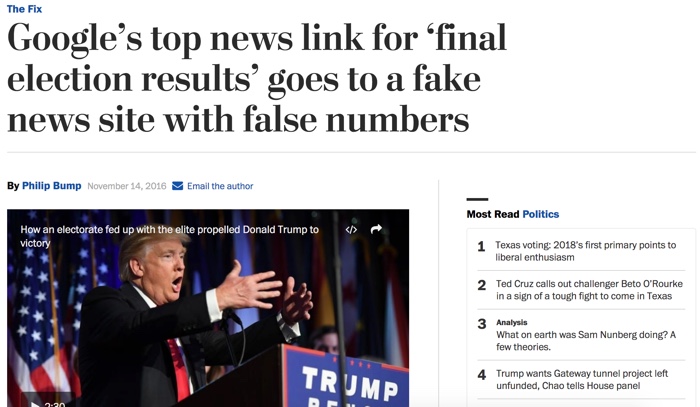

The Washington Post, November 14, 2016

Another reason why we shouldn’t trust Google to provide us with credible, accurate and neutral information is that its main concern is advertising, not informing. That’s why we should be very worried. While public institutions such as universities, schools, libraries, archives and other memory spaces are losing state funding (the book focuses on the USA but Europe isn’t a paradise either in that respect), private corporations and their black-boxed information-sorting tools are taking over and gaining greater control over information and thus over the representation of cultural groups or individuals.

Noble ties these concerns about technology to a few observations regarding the sociopolitical atmosphere in her country: disingenuous ideologies of ‘colourblindness’, the rise of “journalism” that courts clicks and advertising traffic rather than quality in its reporting, a head of state known for his affinities with white supremacy and disinformation, and a climate characterized by hostility towards unions and movements such as Black Lives Matter.

What makes Algorithms of Oppression. How Search Engines Reinforce Racism particularly interesting is that its author doesn’t stop at criticism. She also suggests a few steps that we (the internet users), Google, its Silicon Valley ilk and the government should take in order to achieve an information system that doesn’t reinforce current systems of domination over vulnerable communities.

Noble strongly calls for public policies that protect the right to fair representation online. This would start with regulation of tech giants like Google that would prevent them from holding a monopoly over information.

She also urges tech companies to hire more women, more black people and more Latinos to diversify their tech workforce, and also to bring in critically-minded people who are experts in black studies, ethnic studies, American Indian studies, gender and women’s studies and Asian American studies, as well as other graduates who have a deep understanding of history and critical theory.

Noble also encourages internet users to ask themselves more often how the information they have found has emerged and what its social and historical context might be.

Finally, the author suggests that nonprofit and public research funding should be dedicated to exploring alternatives to commercial information platforms. These services wouldn’t be dependent on advertising and would pay closer attention to the circulation of patently false or harmful information.

Algorithms of Oppression is a powerful, passionate and thought-provoking publication. It builds on previous research (such as Cathy O’Neil’s book Weapons of Math Destruction) but it also asks new questions informed by a black feminist lens. And while Noble’s book focuses on Google, many of her observations and lessons could be applied to the tech corporations that mediate our everyday hyper-connected life.