As the title of this post implies, i was in Amsterdam on Sunday for the DocLab Interactive Conference, part of the Immersive Reality program of the famous documentary festival.

James George at the DocLab Interactive Conference. Photo by Nichon Glerum

The conference (ridiculously interesting and accompanied by an exhibition i wish i could see all over again, but more about all that next week) looked at how practitioners redefine the documentary genre in the digital age. In his talk, artist James George presented artistic projects that demonstrate how fast computational photography is evolving. Most of the projects he commented on were new to me but, more importantly, once they were stitched together they formed a picture of how innovations are changing our relation to the essence, authorship and even definition of the image. Here are the notes i took during his fast and efficient slideshow of artistic works:

Erik Kessels, 24hrs of Photos

Erik Kessels printed out every photo uploaded to Flickr over a 24-hour period. Visitors to the show could literally drown in a sea of images.

The work, commented George, functions more as data visualization than as a photo installation.

Penelope Umbrico, Suns (From Sunsets) from Flickr, 2006-ongoing. Installation view, SF MoMA

In 2006, Penelope Umbrico searched for ‘sunset’ on Flickr. She then printed the 541,795 matches and assembled them into one wall-size collage of photographs. She said: “I take the sheer quantity of images online as a collective archive that represents us – a constantly changing auto-portrait.”

With his ongoing work 9 Eyes, Jon Rafman shows that you don’t need to be a photographer to create photos. The artist spent hours poring over Google Street View to spot the inadvertently eerie or poetic sights captured by the nine lenses of the Google Street View camera cars.

Clement Valla, Postcards from Google Earth

Clement Valla fortuitously discovered broken images on Google Earth. The glitches are the result of the constant and automated data collection handled by computer algorithms. In these “competing visual inputs”, the 3D models of Earth’s surface fail to align with the corresponding aerial photography.

Google Earth is a database disguised as a photographic representation. These uncanny images focus our attention on that process itself, and the network of algorithms, computers, storage systems, automated cameras, maps, pilots, engineers, photographers, surveyors and map-makers that generate them.

Teehan+Lax Labs, Google Street View Hyperlapse

Teehan and Lax created a tool that taps into Street View imagery and pulls it together to create an animated tour. Pick the start and end points on Google Maps and Hyperlapse stitches together a rolling scene of Street View imagery as if you were driving the GSV car.
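To make the idea concrete, here is a minimal sketch of the planning step such a tool needs: given a start and an end point, decide where each frame is taken and which way the camera faces. It is not Teehan+Lax's code. The function names are hypothetical, and the straight-line interpolation is a simplifying assumption (a real tool would follow the road network and request the imagery from a Street View service).

```python
import math


def bearing_degrees(lat1, lon1, lat2, lon2):
    """Initial compass bearing from point 1 to point 2, in degrees [0, 360)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    x = math.sin(dlon) * math.cos(phi2)
    y = (math.cos(phi1) * math.sin(phi2)
         - math.sin(phi1) * math.cos(phi2) * math.cos(dlon))
    return (math.degrees(math.atan2(x, y)) + 360.0) % 360.0


def plan_frames(start, end, frame_count):
    """Return one (lat, lon, heading) tuple per frame.

    Assumption: frames are spaced evenly on a straight line between
    start and end, which only approximates a real driving route.
    """
    if frame_count < 2:
        raise ValueError("need at least two frames")
    points = []
    for i in range(frame_count):
        t = i / (frame_count - 1)
        points.append((start[0] + t * (end[0] - start[0]),
                       start[1] + t * (end[1] - start[1])))
    frames = []
    for i, (lat, lon) in enumerate(points):
        if i < len(points) - 1:
            # Look towards the next sample point, as a driver would.
            heading = bearing_degrees(lat, lon, *points[i + 1])
        else:
            # The last frame keeps the previous heading.
            heading = frames[-1][2]
        frames.append((lat, lon, heading))
    return frames
```

Each tuple would then be used to fetch one panorama image; playing the images back in order gives the rolling, time-lapse effect.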

Staro Sajmište

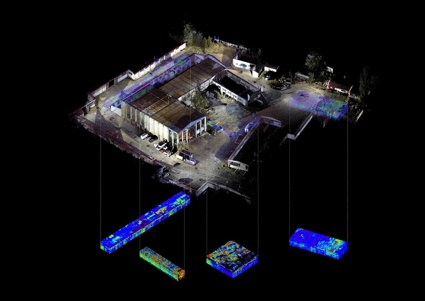

Living Death Camp, by Forensic Architecture and ScanLAB, combines terrestrial laser scanning with ground-penetrating radar to dissect the layers of life and evidence at two concentration camp sites in the former Yugoslavia.

But how about the camera? When is the camera of the future going to emerge? What is it going to be like? It will probably be more similar to a database than to an image. In his keynote speech concluding the Vimeo Festival + Awards in 2010, Bruce Sterling described his prediction of the future of imaging technology. For him a camera of the future may function as follows: “It simply absorbs every photon that touches it from any angle. And then in order to take a picture I simply tell the system to calculate what that picture would have looked like from that angle at that moment. I just send it as a computational problem out in to the cloud wirelessly.”

In mid-2005, the New York City MTA commissioned a weapons manufacturer to build a futuristic anti-terror surveillance system. The images were to be fed directly into computers and watched by algorithms, and alerts would be sent automatically when danger was detected. However, the system was plagued by “an array of technical setbacks”: it failed all the tests and the whole project ended in lawsuits. Thousands of security cameras in the New York subway stations now sit unused.

One month after Sterling’s talk, Microsoft released Kinect. The video game controller uses a depth-sensing camera and computer vision software to sense the movements and position of the player. Visualizations of space as seen through Kinect’s sensors can be computed from any angle using 3D software. James George, in collaboration with Alexander Porter, decided to explore the artistic use of the surveillance and Kinect technologies. “We soldered together an inverter and motorcycle batteries to run the laptop and Kinect sensor on the go. We attached a Canon 5D DSLR to the sensor and plugged it in to a laptop. The entire kit went into a backpack.

We spent an evening in the New York Union Square subway capturing high resolution stills and archiving depth data of pedestrians. We wrote an openFrameworks application to combine the data, allowing us to place fragments of the two dimensional images into three dimensional space, navigate through the resulting environment and render the output.”
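The step described in the quote, placing fragments of a two-dimensional image into three-dimensional space, can be illustrated with a small sketch. This is not the artists' openFrameworks application. It assumes a simple pinhole camera model, a depth map already aligned pixel for pixel with the colour image, and hypothetical intrinsics (`fx`, `fy`, `cx`, `cy`) that in practice would come from calibrating the two cameras against each other.

```python
def back_project(u, v, depth_m, fx, fy, cx, cy):
    """Turn pixel (u, v) with a depth in metres into a 3D point (x, y, z).

    Pinhole model: fx, fy are focal lengths in pixels and (cx, cy) is
    the principal point. All four are assumed calibration values.
    """
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


def colored_point_cloud(depth, color, fx, fy, cx, cy):
    """Pair every valid depth pixel with the colour at the same pixel.

    depth and color are row-major nested lists of equal size (an
    assumption: the two images are taken to be already aligned).
    Pixels with no depth reading (value <= 0) are skipped.
    """
    cloud = []
    for v, row in enumerate(depth):
        for u, d in enumerate(row):
            if d <= 0:
                continue
            cloud.append((back_project(u, v, d, fx, fy, cx, cy), color[v][u]))
    return cloud
```

Once every pixel has a position in space, a virtual camera can be moved freely around the resulting cloud, which is what allows the scene to be viewed from angles the physical camera never occupied.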

The OS image capture system, which pairs the Microsoft Kinect camera with a DSLR video camera, creates 3D models of the subjects in the video that can be virtually re-photographed from any angle.

James George, Jonathan Minard, and Alexander Porter, CLOUDS

George and Porter later worked with Jonathan Minard and used the technology again for CLOUDS, an interview series with artists and programmers discussing the way digital culture is changing creative practices.

Sophie Kahn

New and old media collide in Sophie Kahn’s work. The artist uses a precise 3D laser scanner designed for static objects to create sculptures of human heads and bodies. Because a body is always in flux, the technology receives conflicting spatial co-ordinates and generates irregular results.

Marshmallow Laser Feast, MEMEX | Duologue

Marshmallow Laser Feast’s Memex is a “3D study of mortality exploring new photographic processes, in this case photogrammetry”.

MLF worked with a 94-camera high-resolution scanning rig to create a full-body scan of an elderly woman and explore what filmmaking for the virtual-reality environment could be like.

Introducing the Source Filmmaker

Source Filmmaker, produced by Valve, is a tool to create movies inside the Source game engine. George finds Valve's work relevant to his own practice because, although the company comes from video game culture, it investigates the same ideas.

Naked scene from Beyond: Two Souls (image)

Beyond: Two Souls, by Quantic Dream, is an interactive drama action-adventure video game for PlayStation 3. At some point in the game, the character Jodie Holmes (played by Ellen Page) takes a shower, all in a perfectly politically correct fashion.

After the release of the game, nude images of Jodie Holmes leaked online, and were published by several gaming blogs. The “nude photos” were a result of hacking into the files of a debug version of the game and manipulating the camera. The game’s publisher, Sony Entertainment, got these posts taken down. “The images are from an illegally hacked console and are very damaging for Ellen Page,” the rep reportedly told one site. “It’s not actually her body. I would really appreciate if you can take the story down to end the cycle of discussion around this.”

But if the nude images were “not actually her body,” how could they be “very damaging” to the actress? Whether or not the answer to this question is a convincing one, the little scandal shows the kind of challenge that filmmakers will have to face when dealing with this kind of hyper-realistic technology.

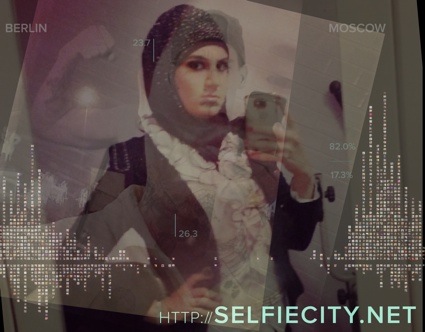

Selfiecity by Lev Manovich and Moritz Stefaner analyzes 3,200 selfies taken in several metropolises around the world and looks at them through theoretical, artistic and quantitative lenses.

DocLab Immersive Reality is accompanied by an exhibition featuring Virtual Reality projects, web documentaries, apps and interactive artworks. The show remains open until the end of the month at The Flemish Arts Centre De Brakke Grond in Amsterdam.