Eye Catcher

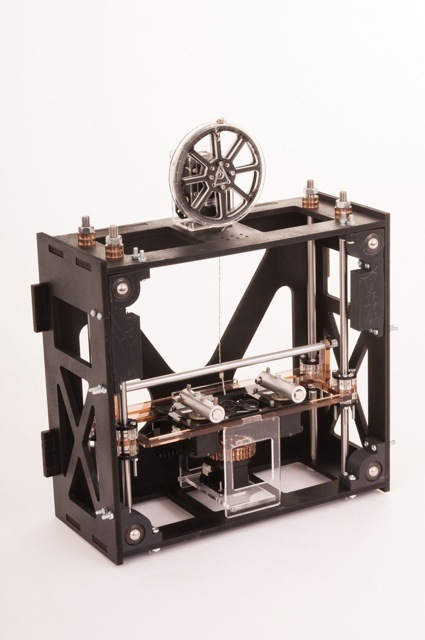

Behind the wall, a UR robot running IAL’s own “Scorpion” software puppeteers the frame

A few weeks ago, I visited the graduation show of the Interactive Architecture Lab, a research group and Masters Programme at the Bartlett School of Architecture headed by Ruairi Glynn, Christopher Leung and William Bondin. And it was, just like last year (remember the Candy Cloud Machine and the architectural creatures that behave like slime mould?), packed with very good surprises. I’ll report on a couple of them in the coming days.

I’ll start nice and easy today with the Eye Catcher, by Lin Zhang and Ran Xie, because if you’ve missed the work at the Bartlett show, you’ll get another chance to discover it from tomorrow on at the Kinetica Art Fair in London.

The most banal-looking wooden frame thus takes on a life of its own as soon as you come near it. It quickly positions itself in front of you, spots your eyes and starts expressing ‘emotions’ based on your own. Eye Catcher uses the arm of an industrial robot, high-power magnets, a hidden pinhole camera, ferrofluid and emotion recognition algorithms to explore novel interactive interfaces based on the mimicry and exchange of expressions.

A few words with Lin Zhang:

Hi Lin! I think what I like about the frame is that it is so discreet and unassuming. You can pass by it and not even notice it. So why did you choose to make it so quiet and ‘normal’ looking?

Yes exactly, it’s a really normal static object, which exists in everyone’s daily life, so the magic happens the moment it begins to move. I was inspired by my tutor’s artwork finding “life in motion”: not all motion can inspire wonder and pleasure in the observer, but playing with the perception of animacy in objects often does. There are many digital interfaces that have the appearance of advanced technologies and compete for our attention, but I think it is better to develop interfaces that, rather than standing out, can sit within our normal daily lives and then come to life at the right moment, whether for functional or playful purposes.

Magnetic Puppeteer that manipulates the frame from behind the wall

How does the frame respond to and communicate emotions? How does it work?

To start with, the height of passers-by is calculated by ultrasonic sensors embedded in the ceiling. This is remapped to the robotic arm (controlled using the Lab’s open-source controller Scorpion) hidden behind the wall, which magnetically drives the frame to align “face to face” with onlookers. A wireless pinhole camera in the frame transmits the video footage of onlookers back to our software (built in Processing and using FaceOSC), which analyses 12 values of facial expression such as the width of the mouth, the height of the eyebrow, the height of the eyeball, etc. That information then drives the reciprocal expressions of the frame’s fluid “eyes”, controlled by four servo/magnets manipulating ferrofluid.
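To make the chain Lin describes a little more concrete, here is a minimal Python sketch of the two remapping steps: ceiling sensor reading to frame height, and facial values to servo angles. It is not the project's code (which runs in Processing with Scorpion). Every function name, the input ranges, the eye offset and the way the four servos are assigned are assumptions made purely for illustration.

```python
from dataclasses import dataclass


def remap(value, in_lo, in_hi, out_lo, out_hi):
    """Linearly map value from [in_lo, in_hi] to [out_lo, out_hi], clamped at both ends."""
    if in_hi == in_lo:
        raise ValueError("input range must not be empty")
    t = (value - in_lo) / (in_hi - in_lo)
    t = max(0.0, min(1.0, t))
    return out_lo + t * (out_hi - out_lo)


def person_height_cm(ceiling_height_cm, sensor_distance_cm):
    """A ceiling-mounted ultrasonic sensor measures the distance down to the head."""
    return max(0.0, ceiling_height_cm - sensor_distance_cm)


def frame_target_cm(height_cm, eye_offset_cm=12.0, lo_cm=80.0, hi_cm=200.0):
    """Height on the wall at which the frame should sit to be 'face to face'.

    eye_offset_cm (eyes sit below the top of the head) and the reachable
    band lo_cm..hi_cm are hypothetical values, not taken from the project.
    """
    return max(lo_cm, min(hi_cm, height_cm - eye_offset_cm))


@dataclass
class FaceValues:
    """Two of the facial values mentioned in the interview (units are assumed)."""
    mouth_width: float
    eyebrow_height: float


def servo_angles(face, mouth_range=(10.0, 16.0), brow_range=(7.0, 9.0)):
    """Turn facial values into four servo angles in degrees (two per 'eye').

    Assumed layout: one servo per eye follows the eyebrow height, the other
    follows the mouth width. The input ranges are placeholders to calibrate.
    """
    brow = remap(face.eyebrow_height, brow_range[0], brow_range[1], 0.0, 180.0)
    mouth = remap(face.mouth_width, mouth_range[0], mouth_range[1], 0.0, 180.0)
    return (brow, mouth, brow, mouth)


if __name__ == "__main__":
    height = person_height_cm(ceiling_height_cm=300.0, sensor_distance_cm=130.0)
    print("frame target (cm):", frame_target_cm(height))
    print("servo angles:", servo_angles(FaceValues(mouth_width=13.0, eyebrow_height=8.0)))
```

Clamping inside `remap` is a deliberate choice in this sketch: a noisy sensor reading or an odd face measurement then saturates at the end of the range instead of sending the arm or a servo somewhere it cannot go.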

Do you see The Eye Catcher mainly as a work that aims to entertain and amuse, or is there something else behind the work? Some novel interfaces, interactions or mechanisms you wanted to explore?

The Eye Catcher project is a method to examine my research question, which is to explore the possibilities for building non-verbal interaction between observers and objects through mimicry of specific anthropomorphic characteristics. It asks to what extent such mimicry can be deployed, specifically utilising eye-like stimuli, for establishing novel expressive interactive interfaces. We found that humans perceive dots, specifically eye-like stimuli, automatically, as an almost hardwired ability which develops at a very early stage of human life. By the age of 2 months, infants show a preference for looking at the eyes over other regions of the face, and by the age of 4 months, they gain the ability to discriminate between direct and averted gaze. Therefore, the eye is the foundation of human interaction upon which we build more complex social interactions.

Eye Catcher moves along the wall to approach a visitor

Ferrofluid “Eyes” puppeteered magnetically

What were the biggest challenges you encountered while developing the work?

The biggest challenge is how to make the frame and two dots more animate, so that they appear natural rather than robotic. So we were really exploring how long reactions should take, how to select a suitable behaviour in response to people’s expressions, and how to provide continual unpredictable interaction to keep observers’ attention. There are still a lot of questions to be explored, and even though it’s ultimately only two dots we’re animating, the limitations are a useful constraint to work within.

Will you modify or upgrade The Eye Catcher for Kinetica?

Yes, we’re working on it now for Kinetica Art Fair. We’ve already built a new frame that moves faster and more quietly. We’ve updated it with a new Wi-Fi camera which provides more reliable facial recognition and smoother behaviour on the wall. The film you’ve seen is really only a prototype, so it’s exciting to see how the new iteration will perform. We’ve switched round some behaviour too, to see how the public reacts. For example, at Kinetica we’ve programmed it to prefer to interact with children, which should get them excited when it drops down to see them. In the future we’d like to build a more permanent piece using a two-axis rail system rather than a robot arm. In theory the frame could then work on a much longer wall, which would allow all sorts of new types of interaction.

Thanks Lin!

Check out the Eye Catcher at the KINETICA ART FAIR on 16th – 19th October 2014 at the Old Truman Brewery in London.

The project also references works such as Omnivisu, Opto-Isolator and All eyes on you.