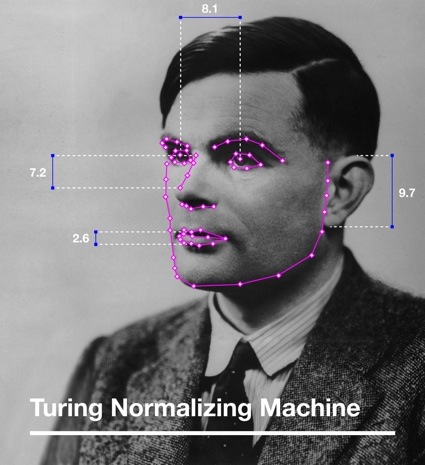

Mushon Zer-Aviv and Yonatan Ben-Simhon, The Turing Normalizing Machine, 2013. Image courtesy of the artists

Alan Turing was a mathematician, a logician, a cryptanalyst, and a computer scientist (as I’m sure you all know). During World War 2 he played a key role in cracking the Nazi Enigma code, and he later came to be regarded as the father of computer science and artificial intelligence. In 1952, Turing was convicted of having committed criminal acts of homosexuality. Given a choice between imprisonment and chemical castration, Turing chose to undergo a medical treatment that made him impotent and caused gynaecomastia. Suffering from the effects of the treatment and from being regarded as abnormal by society, the scientist committed suicide in June 1954.

Inspired by Turing’s life and research, Mushon Zer-Aviv and Yonatan Ben-Simhon have devised a machine that attempts to answer a question which, at first, might seem baffling: “Who is normal?”

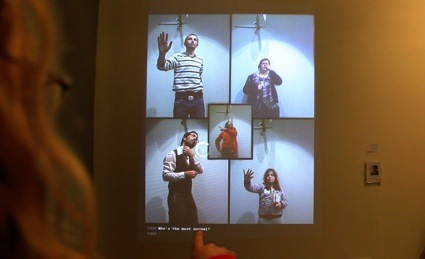

The Turing Normalizing Machine is an experimental research project in machine learning that identifies and analyzes the concept of social normalcy. Each participant is presented with a video lineup of 4 previously recorded participants and is asked to point out the most normal-looking of the 4. The person selected is examined by the machine and added to its algorithmically constructed image of normalcy. The kind participant’s video is then added as a new entry in the database.

(…)

Conducted and presented as a scientific experiment, TNM challenges participants to consider the outrageous proposition of algorithmic prejudice. The responses range from fear and outrage to laughter and ridicule, and finally to the alarming realization that we are set on a path towards wide systemic prejudice, ironically initiated by its victim, Turing.

Mushon Zer-Aviv and Yonatan Ben-Simhon, The Turing Normalizing Machine, 2013

I found out about the TNM the other day while reading the latest issue of the always excellent Neural magazine. I immediately contacted Mushon Zer-Aviv to get more information about the work:

Hi Mushon! What has the machine learnt so far? Are patterns emerging in what people find ‘normal’, such as someone who smiles or someone who dresses conservatively? What is the model of normality at this stage?

TNM first ran as a pilot version at The Bloomfield Museum of Science in Jerusalem, as part of the ‘Other Lives’ exhibition curated by Maayan Sheleff. Jerusalem is a perfect environment for this experiment, as it is a divided city with multiple ethnic, cultural and religious groups practically hating each other’s guts. The external characteristics of these communities are quite distinguishable as well, from dress code to skin tone and hair color. While the Turing Normalizing Machine has not arrived at a single canonical model of normality yet (and possibly never will), some patterns have definitely emerged and are already worth discussing. One example is the bewilderment of a religious Jewish woman trying to choose the most normal of 4 Palestinian children.

The machine does not construct a model of normality per se. To better explain how the prejudice algorithm works, consider Google’s PageRank algorithm. When a participant chooses one of the 4 random profiles presented to them as ‘most normal’, that profile moves up the normalcy rank while the others move down. At the same time, if a profile is considered especially normal, the choices made by its owner carry more weight in the ranking than other people’s choices, and vice versa.
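The interview compares the ranking to PageRank but does not publish the machine’s actual update rule, so the Python sketch below is a hypothetical, loosely PageRank-inspired stand-in rather than the artists’ code. It assumes every profile starts at a baseline score of 1.0; a choice moves the chosen profile up by `step` scaled by the chooser’s own relative score, and the other profiles in the lineup share an equal downward move, so the total score stays constant. `NormalcyRank`, `record_choice`, `step` and `baseline` are invented names for illustration.

```python
from collections import defaultdict


class NormalcyRank:
    """Hypothetical zero-sum ranking: a chooser's own score scales how much
    their vote moves the profiles in the lineup (not the artists' algorithm)."""

    def __init__(self, step: float = 0.1, baseline: float = 1.0):
        self.step = step          # assumed size of a single vote
        self.baseline = baseline  # assumed starting score for every profile
        # Any profile seen for the first time starts at the baseline.
        self.scores: dict[str, float] = defaultdict(lambda: baseline)

    def influence(self, chooser: str) -> float:
        # A chooser who is ranked as more "normal" counts for more; scores are
        # clamped at zero so a low-ranked chooser can never invert a vote.
        return max(self.scores[chooser], 0.0) / self.baseline

    def record_choice(self, chooser: str, lineup: list[str], chosen: str) -> None:
        if chosen not in lineup:
            raise ValueError("chosen profile must be in the lineup")
        delta = self.step * self.influence(chooser)
        others = [p for p in lineup if p != chosen]
        # The chosen profile moves up the normalcy rank...
        self.scores[chosen] += delta
        # ...and the rest share an equal move down, keeping the total unchanged.
        for p in others:
            self.scores[p] -= delta / len(others)

    def ranking(self) -> list[tuple[str, float]]:
        return sorted(self.scores.items(), key=lambda kv: kv[1], reverse=True)


# Usage: p5 picks p2; then p2, now ranked above baseline, picks p1.
rank = NormalcyRank()
rank.record_choice("p5", ["p1", "p2", "p3", "p4"], "p2")
rank.record_choice("p2", ["p1", "p3", "p4", "p5"], "p1")
print(rank.ranking())
```

Because p2 was ranked above baseline when voting, p2’s choice of p1 moves p1 up by 0.11 rather than 0.1. A full PageRank computation would instead iterate over the entire graph of choices until the scores converge; the incremental update simply keeps each vote cheap to apply as new participants arrive.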

We are currently working on the second phase of the experiment that analyzes and visualizes the network graph generated by the data collected in the first installment. We’re actually looking to collaborate with others on that part of the work.

Usually society doesn’t get to decide what is good or even normal for society. The decision often comes from ‘the top’. If such an algorithm to determine normality were ever applied, could we trust people to help decide who looks normal and who doesn’t?

While I agree that top-down role models influence the image of what’s considered normal or abnormal, it is the wider society that absorbs, approves and propagates these ideas. Whether we like it or not, such algorithms are already in use and integrated into our daily lives. It happens when Twitter’s algorithms suggest who we should follow, when Amazon’s algorithms offer what we should consume, when OkCupid’s algorithms tell us who we should date, and when Facebook’s algorithms feed us what they believe we would ‘like’.

What inspired you to come up with an experiment in algorithmic prejudice?

This experiment is inspired by the life and work of British mathematician Alan Turing, a WW2 hero, the father of computer science and the pioneering thinker behind the quest for Artificial Intelligence. Specifically, we were interested in Turing’s tragic life story, with his open homosexuality leading to his prosecution, castration, depression and death. Some who study Turing’s legacy see his attraction to AI, and his attempts to challenge the concepts of intelligence, awareness and humanness, as partly influenced by his frustration with the systematic prejudice that marked him ‘abnormal’. Through the Turing Normalizing Machine we argue that the technologies Turing hoped would one day free us from the darker and irrational parts of our humanity are today often used to amplify them.

The video of the work explains that “the results of the research can be applied to a wide range of fields and applications.” Could you give some examples, in politics for instance? (I’m asking about politics because the video illustrates the idea with images of Silvio Berlusconi.)

Berlusconi is a symbol of the unholy union between media and politics, and he embodies the disconnect between what people know about their leaders (corruption, scandals, lies…) and what people see in their leaders (identification, pride, nationalism, populism…). A machine could never decipher Berlusconi’s success with Italian voters from the facts alone; it needs to learn what Italians see in him to get a better picture of the political reality.

Another obvious example is security, and especially the controversial practice of racial profiling. My brother used to work in El Al airport security and was instructed to screen passengers using external characteristics as cues for normalcy or abnormalcy. Here again we already see technology stepping in to amplify our prejudice-based decision-making processes. Simply Google ‘Project Hostile Intent’ and you’ll see that scientific research into algorithmic prejudice is already underway and has been for quite some time.

How does the system work?

The participant is presented with 4 video portraits and is requested to point at the one who looks the most normal of the 4. Meanwhile, a camera identifies the pointing gesture, records the participant’s portrait, and analyzes the video (using face recognition algorithms among other technologies). The video portrait is then added to the database and is presented to the next participant to be selected as normal or not. The database saves the videos, the selections and other analytical metadata to develop its algorithmic model of social normalcy.
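The loop described above can be sketched in Python to make the order of operations concrete. This is a hypothetical data model, not the installation’s software: `Portrait`, `Selection`, `NormalcyDatabase` and `run_session` are invented names, the `pick` callback stands in for the camera’s pointing-gesture detection, and face recognition is reduced to an empty `metadata` dict. It also assumes, since the interview doesn’t say, that early visitors are simply recorded until the database holds at least 4 portraits, and that a new portrait joins the pool only after its owner has chosen.

```python
import random
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Portrait:
    participant_id: str
    video_path: str  # hypothetical: where the recorded clip is stored
    metadata: dict = field(default_factory=dict)  # e.g. face-analysis output


@dataclass
class Selection:
    chooser_id: str
    lineup: list[str]
    chosen_id: str


@dataclass
class NormalcyDatabase:
    portraits: dict[str, Portrait] = field(default_factory=dict)
    selections: list[Selection] = field(default_factory=list)

    def lineup(self, k: int, rng: random.Random) -> list[str]:
        """Draw k distinct stored portraits at random, or none if too few exist."""
        ids = list(self.portraits)
        return rng.sample(ids, k) if len(ids) >= k else []


def run_session(
    db: NormalcyDatabase,
    portrait: Portrait,
    pick: Callable[[list[str]], str],
    rng: Optional[random.Random] = None,
    k: int = 4,
) -> Optional[Selection]:
    """One visit: show a lineup, log the choice, then store the new portrait."""
    rng = rng if rng is not None else random.Random()
    lineup = db.lineup(k, rng)
    selection = None
    if lineup:
        # `pick` stands in for the camera's pointing-gesture detection.
        chosen = pick(lineup)
        if chosen not in lineup:
            raise ValueError("chosen portrait must be in the lineup")
        selection = Selection(portrait.participant_id, lineup, chosen)
        db.selections.append(selection)
    # The new portrait joins the pool only after the choice, so a participant
    # never appears in their own lineup.
    db.portraits[portrait.participant_id] = portrait
    return selection


# Usage: the first four visitors only seed the database; the fifth sees a lineup.
db = NormalcyDatabase()
rng = random.Random(0)
for i in range(4):
    run_session(db, Portrait(f"p{i}", f"p{i}.mp4"), pick=lambda ids: ids[0], rng=rng)
sel = run_session(db, Portrait("p4", "p4.mp4"), pick=lambda ids: ids[0], rng=rng)
```

Keeping selections as a separate log, rather than storing only scores, preserves who chose whom, which is the raw material for any later network-graph analysis of the collected data.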

Any upcoming show or presentation of the TNM?

There are some in the pipeline, but none that I can share at this point. We are definitely looking forward to more opportunities to install and present TNM, as in every community it brings up different discussions about physical appearance, social normalcy and otherness. Beyond that, we want the system to challenge its model of prejudice through its encounters with different communities with different social values, biases and norms. Otherwise it would be ignorant, and we wouldn’t want that now, would we?

Thanks Mushon!