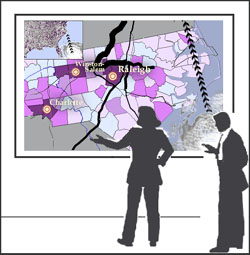

In a scene from *Minority Report*, Tom Cruise stands in front of a screen and uses hand gestures to manipulate data and run analyses.

At Pennsylvania State University, researchers are working on something similar. Their system combines GIS (geographic information systems), natural language technology, cognitive engineering and gesture science.

The system, called Dialogue Assisted Visual Environment for GeoInformation (DAVE_G), is meant to help teams collaborate on decisions as a crisis unfolds. It recognizes gestures made alongside spoken dialogue and interprets what the two mean together.

“Spatial concepts are vague for computers,” explains Professor Alan MacEachren. “When a user tells a computer a location is ‘near,’ ‘between’ or ‘north of’ something, it has trouble interpreting what that means. But when you add hand gestures, the accuracy improves.”

For example, a crisis team tracking a hurricane might say, “Let’s look at the population distribution here in the southeast” while gesturing at the displayed map and circling the region of interest.

The computer interprets the combined voice and gesture commands, zooms in on that area and responds to the follow-up queries. A team member might then gesture to trace a possible path for the hurricane and ask the computer to show which areas would be hit hardest by flooding if the storm moves north or south of its current position.

The team is also developing software that lets field workers operate GIS applications with a stylus on tablet PCs and PDAs.

Via IFTF’s Future Now GovTech.

Related: “Data-rich” environment for scientific discovery.